In this article, we will walk through a comprehensive roadmap and tips for aspiring data engineering professionals. When I was looking to transition from an entry-level data analyst role to data engineering, this roadmap helped me achieve that goal and build the mindset of a successful engineer.

There are several ways to become a data engineer, even if you come from a non-technical background. Start with the basics of data engineering, learn a programming language or two, and then learn data processing tools and frameworks and how to build ETL pipelines. Most importantly, apply what you learn in real-world projects and showcase them in your portfolio. Following these steps will put you in a strong position to land a well-paid Data Engineer role.

Roadmap for becoming a Data Engineer:

- Understand the Basics of Data Engineering:

  - Learn about databases, data structures, and algorithms.

  - Familiarize yourself with data modelling concepts such as relational, dimensional, and NoSQL data models. An overview of what data engineering is and its fundamentals is a good starting point.

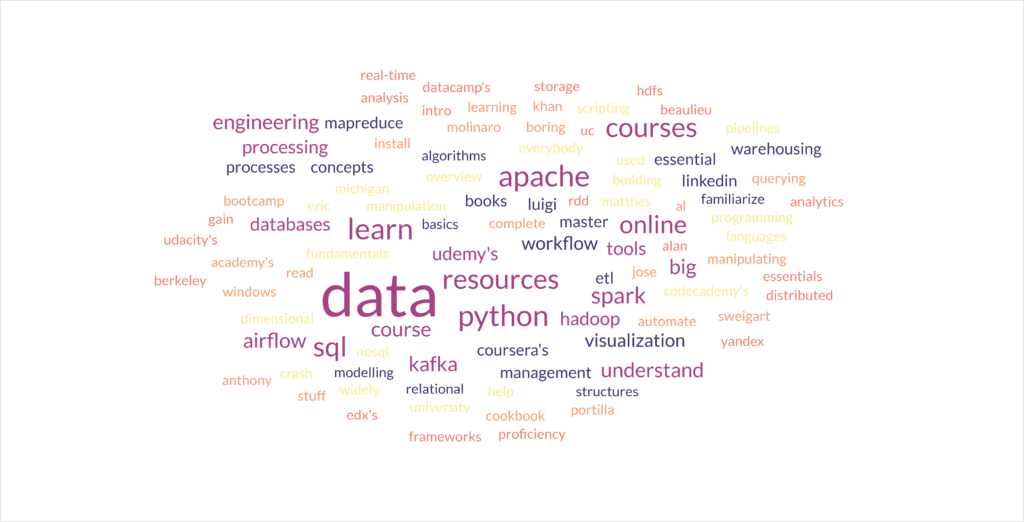
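To make the relational and dimensional modelling ideas above more concrete, here is a minimal star-schema sketch using Python's built-in `sqlite3` module: a `fact_sales` table of measurable events that references a `dim_product` dimension table of descriptive attributes. The table names, columns, and sample rows are hypothetical and chosen only for illustration.

```python
import sqlite3


def build_star_schema() -> sqlite3.Connection:
    """Create a tiny in-memory star schema: one dimension table and one fact table."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        -- Dimension table: descriptive attributes, one row per product.
        CREATE TABLE dim_product (
            product_id INTEGER PRIMARY KEY,
            name       TEXT NOT NULL,
            category   TEXT NOT NULL
        );

        -- Fact table: measurable events, linked to the dimension by a foreign key.
        CREATE TABLE fact_sales (
            sale_id    INTEGER PRIMARY KEY,
            product_id INTEGER NOT NULL REFERENCES dim_product(product_id),
            quantity   INTEGER NOT NULL,
            amount     REAL NOT NULL
        );
        """
    )
    conn.executemany(
        "INSERT INTO dim_product VALUES (?, ?, ?)",
        [(1, "Laptop", "Electronics"), (2, "Desk", "Furniture"), (3, "Monitor", "Electronics")],
    )
    conn.executemany(
        "INSERT INTO fact_sales VALUES (?, ?, ?, ?)",
        [(1, 1, 2, 2000.0), (2, 2, 1, 300.0), (3, 3, 3, 450.0), (4, 1, 1, 1000.0)],
    )
    return conn


def revenue_by_category(conn: sqlite3.Connection) -> list[tuple[str, float]]:
    """Join facts to the dimension and aggregate a measure by a dimension attribute."""
    return conn.execute(
        """
        SELECT p.category, SUM(f.amount) AS revenue
        FROM fact_sales AS f
        JOIN dim_product AS p ON p.product_id = f.product_id
        GROUP BY p.category
        ORDER BY revenue DESC
        """
    ).fetchall()


if __name__ == "__main__":
    print(revenue_by_category(build_star_schema()))
```

Calling `revenue_by_category(build_star_schema())` returns `[('Electronics', 3450.0), ('Furniture', 300.0)]`. Keeping descriptive attributes in the dimension and numeric measures in the fact table is what lets a single join plus `GROUP BY` answer "slice and dice" questions.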

- Master Programming Languages:

  - Learn Python: Python is widely used in data engineering for data manipulation, scripting, and building data pipelines. Resources:

    - Online courses: Coursera’s “Python for Everybody” by the University of Michigan, Codecademy’s Python course.

    - Books: “Python Crash Course” by Eric Matthes, “Automate the Boring Stuff with Python” by Al Sweigart.

  - Learn SQL: SQL is essential for querying and manipulating data in databases. Resources:

    - Online courses: Udemy’s “The Complete SQL Bootcamp” by Jose Portilla, Khan Academy’s SQL course.

    - Books: “SQL Cookbook” by Anthony Molinaro, “Learning SQL” by Alan Beaulieu.
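In practice, Python and SQL are usually used together: Python handles scripting and cleanup, while SQL handles set-based querying. The sketch below assumes only the standard library (`csv`, `io`, `sqlite3`) and a made-up CSV export; it normalizes messy fields in Python and then answers a question with a parameterized SQL query. The function names are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw export with inconsistent casing and stray whitespace.
RAW_CSV = """user_id,country,signup_year
1, us ,2022
2,DE,2023
3,us,2023
4, de,2023
5,FR,2021
"""


def clean_rows(text: str) -> list[tuple[int, str, int]]:
    """Python side: parse the CSV and normalize each field."""
    reader = csv.DictReader(io.StringIO(text))
    return [
        (int(row["user_id"]), row["country"].strip().upper(), int(row["signup_year"]))
        for row in reader
    ]


def signups_by_country(rows: list[tuple[int, str, int]], min_year: int) -> list[tuple[str, int]]:
    """SQL side: load the cleaned rows and aggregate with a parameterized query."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE users (user_id INTEGER PRIMARY KEY, country TEXT, signup_year INTEGER)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    # The '?' placeholder lets sqlite3 bind min_year safely instead of string formatting.
    result = conn.execute(
        """
        SELECT country, COUNT(*) AS signups
        FROM users
        WHERE signup_year >= ?
        GROUP BY country
        ORDER BY signups DESC, country
        """,
        (min_year,),
    ).fetchall()
    conn.close()
    return result


if __name__ == "__main__":
    print(signups_by_country(clean_rows(RAW_CSV), 2023))
```

For example, `signups_by_country(clean_rows(RAW_CSV), 2023)` returns `[('DE', 2), ('US', 1)]`; without the `strip().upper()` step, `'DE'` and `' de'` would be counted as separate countries.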

- Gain Proficiency in Data Processing Tools and Frameworks:

  - Apache Hadoop: Learn about distributed processing and storage with Hadoop. Resources:

    - Online courses: Coursera’s “Big Data Essentials: HDFS, MapReduce, and Spark RDD” by Yandex, Udacity’s “Intro to Hadoop and MapReduce” course.

  - Apache Spark: Master Spark for big data processing and analytics. Resources:

    - Online courses: edX’s “Big Data Analysis with Spark SQL” by UC Berkeley, DataCamp’s Spark courses.

  - Apache Kafka: Understand real-time data streaming with Kafka. Resources:

    - Online courses: LinkedIn Learning’s “Apache Kafka Essential Training” by Ben Sullins, Udemy’s “Apache Kafka Series” by Stephane Maarek.
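Hadoop MapReduce and Spark both build on the idea of splitting work into a map step, a shuffle that groups intermediate results by key, and a reduce step. The single-process sketch below simulates those three phases in plain Python for a word count. It illustrates the programming model only; it does not use the Hadoop or Spark APIs, and the function names are hypothetical.

```python
from collections import defaultdict
from typing import Iterable, Iterator


def map_phase(line: str) -> Iterator[tuple[str, int]]:
    """Map: emit (word, 1) for every word in one input record."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[tuple[str, int]]) -> dict[str, list[int]]:
    """Shuffle: group all intermediate values by key, as a cluster would across nodes."""
    grouped: dict[str, list[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce: combine all values for one key into a single result."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> dict[str, int]:
    # On a real cluster, different input splits would be mapped on different workers.
    intermediate = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(intermediate)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["Spark and Hadoop", "Hadoop stores data", "Spark processes data"]))
```

Running `word_count` on the three sample lines counts `spark`, `hadoop`, and `data` twice each. In Hadoop or Spark, the framework distributes the map and reduce work across machines and performs the shuffle over the network, so you mostly write the per-record and per-key logic.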

- Learn Data Pipeline Orchestration and Workflow Management:

  - Apache Airflow: Study workflow automation and scheduling with Airflow. Resources:

    - Online courses: Udemy’s “Apache Airflow: The Hands-On Guide” by John R. Griffiths, Pluralsight’s “Getting Started with Apache Airflow” by Janos Haber.

  - Luigi: Explore another popular workflow management tool. Resources:

    - Documentation and tutorials are available on the Luigi website.
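At their core, orchestrators like Airflow and Luigi model a pipeline as a directed acyclic graph (DAG) of tasks and start each task only after its upstream dependencies have finished. The sketch below shows that dependency ordering with Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+). The task names and the `run_pipeline` helper are hypothetical, and this is not how either tool is actually configured.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "transform_orders": {"extract_orders"},
    "join_orders_customers": {"transform_orders", "extract_customers"},
    "load_warehouse": {"join_orders_customers"},
}


def run_pipeline(dag: dict[str, set[str]]) -> list[str]:
    """Execute tasks in dependency order and return the order in which they ran."""
    sorter = TopologicalSorter(dag)
    sorter.prepare()  # raises CycleError if the graph is not a DAG
    executed: list[str] = []
    while sorter.is_active():
        # Every task in this batch has all upstream tasks done,
        # so a real scheduler could run the batch in parallel.
        for task in sorted(sorter.get_ready()):
            executed.append(task)  # placeholder for actually running the task
            sorter.done(task)
    return executed


if __name__ == "__main__":
    print(run_pipeline(PIPELINE))
    try:
        run_pipeline({"a": {"b"}, "b": {"a"}})
    except CycleError as exc:
        print("Invalid pipeline:", exc.args[0])
```

With `PIPELINE`, both extract tasks become ready first and `load_warehouse` runs last, while a cyclic dependency makes `prepare()` raise `CycleError` before any task runs. Airflow and Luigi add scheduling, retries, and monitoring on top of this basic ordering.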

- Acquire Knowledge of Data Warehousing and ETL Processes:

  - Understand ETL (Extract, Transform, Load) processes and techniques.

  - Learn about data warehousing concepts and tools such as Amazon Redshift, Google BigQuery, and Snowflake.
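A minimal ETL job keeps the three stages separate so each can be tested on its own. The sketch below extracts rows from an in-memory CSV string, transforms them (casting types and rejecting malformed records), and loads them into an SQLite table standing in for a warehouse. It assumes only the standard library; the table name and data are made up, and loading into Redshift, BigQuery, or Snowflake would go through that warehouse's own client or bulk-load tooling instead.

```python
import csv
import io
import sqlite3
from datetime import date

# Hypothetical source extract; order 103 has a malformed amount.
SOURCE = """order_id,order_date,amount
101,2024-01-05,19.99
102,2024-01-06,5.00
103,2024-01-06,not_a_number
104,2024-01-07,42.50
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: read raw records from the source without changing them."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(
    records: list[dict[str, str]],
) -> tuple[list[tuple[int, str, float]], list[dict[str, str]]]:
    """Transform: cast types and validate; return (clean rows, rejected records)."""
    clean, rejected = [], []
    for rec in records:
        try:
            order_date = date.fromisoformat(rec["order_date"])  # validates the date format
            row = (int(rec["order_id"]), order_date.isoformat(), round(float(rec["amount"]), 2))
        except ValueError:
            rejected.append(rec)  # keep bad rows for inspection instead of silently dropping them
            continue
        clean.append(row)
    return clean, rejected


def load(conn: sqlite3.Connection, rows: list[tuple[int, str, float]]) -> None:
    """Load: write rows idempotently so re-running the job does not duplicate data."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS fact_orders "
        "(order_id INTEGER PRIMARY KEY, order_date TEXT, amount REAL)"
    )
    # SQLite's INSERT OR REPLACE overwrites any row with the same primary key.
    conn.executemany("INSERT OR REPLACE INTO fact_orders VALUES (?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    clean, rejected = transform(extract(SOURCE))
    load(conn, clean)
    load(conn, clean)  # a second run leaves the same three rows
    print(conn.execute("SELECT COUNT(*), SUM(amount) FROM fact_orders").fetchone())
    print("rejected:", [r["order_id"] for r in rejected])
```

Loading the same batch twice still leaves three rows, because rows with an existing `order_id` are replaced rather than duplicated, and order `103` ends up in the rejected list instead of failing the whole job.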

- Develop Skills in Data Visualization and Reporting:

  - Learn data visualization tools like Tableau, Power BI, or Python libraries like Matplotlib and Seaborn.

  - Understand principles of effective data visualization and storytelling with data.

- Stay Updated and Continuously Learn:

  - Follow industry blogs, forums, and communities like Stack Overflow, Reddit’s r/datascience, and LinkedIn groups.

  - Attend conferences, webinars, and meetups related to data engineering and big data technologies.

Tips for Success:

- Practice regularly with hands-on projects and real-world datasets.

- Collaborate with peers on data-related projects to gain practical experience.

- Build a strong online presence through GitHub contributions, blog posts, or participating in relevant discussions.

- Network with professionals in the field through LinkedIn, conferences, and local meetups.

- Stay curious and keep exploring new technologies and techniques in data engineering.

Conclusion:

Embarking on the journey to become a successful Data Engineer requires a combination of foundational knowledge, technical skills, and practical experience. By following the roadmap outlined above and leveraging the suggested resources and tips, you can build a solid foundation in data engineering and position yourself for success in this rapidly evolving field.

As you progress on your path to becoming a data engineer, embrace challenges as opportunities for growth and innovation. With dedication, perseverance, and a passion for data, you can carve out a rewarding career in data engineering and contribute to the transformative power of data-driven decision-making in organizations worldwide.